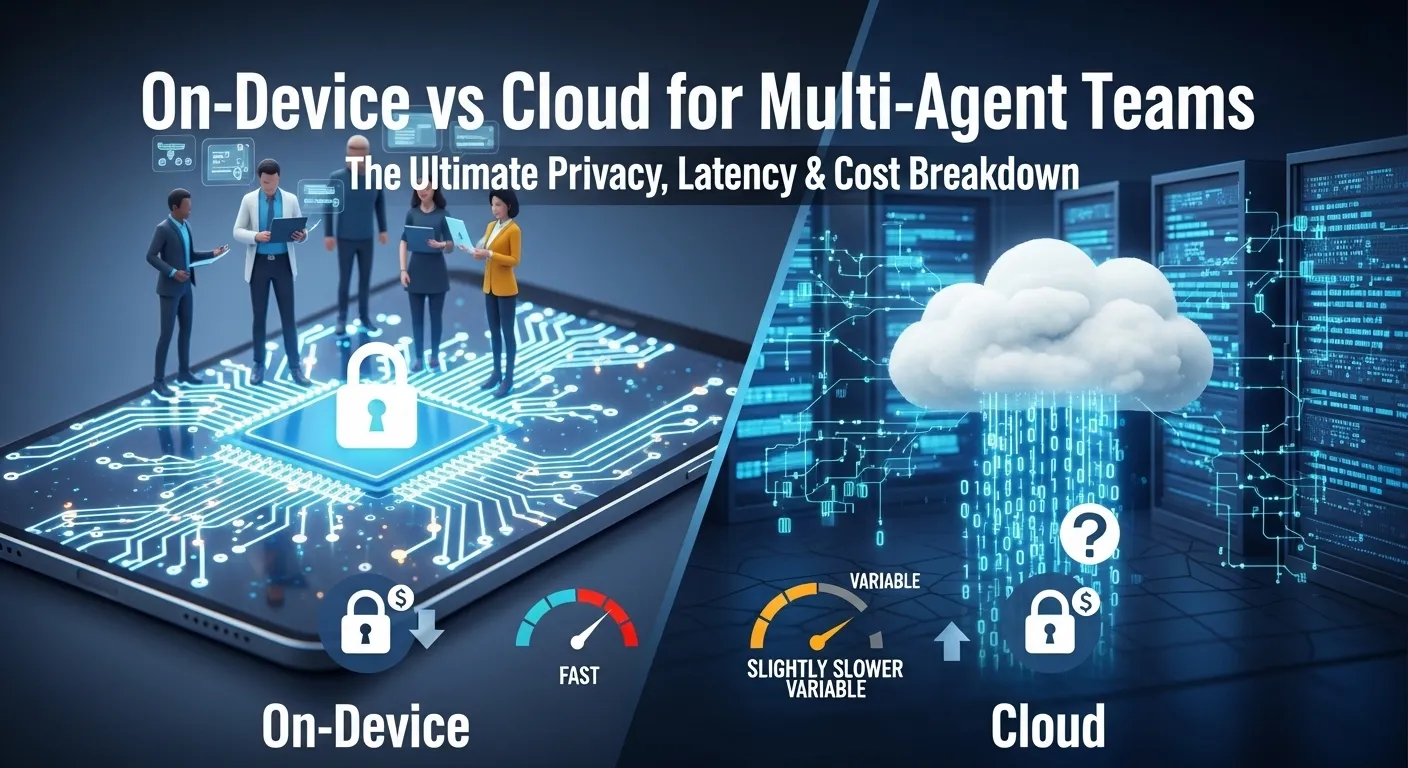

Your AI agents are ready to work together, but where should they live? The choice between on-device processing and cloud computing could mean the difference between blazing-fast responses and embarrassing data leaks. Let's break down the real trade-offs.

What Are Multi-Agent Teams?

Imagine a team of specialized AI assistants working together: one handles research, another manages scheduling, and a third analyzes data. Multi-agent teams are exactly that: coordinated AI systems in which different agents collaborate to solve complex tasks. The big question isn't whether to use them, but where to host them.
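To make the idea concrete, here is a minimal Python sketch of a coordinator that hands tasks to specialist agents and passes each result along as context for the next step. The agent functions and the `Coordinator` class are hypothetical placeholders; a real agent would call a language model or tool where these return fixed strings.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# Hypothetical agent type: takes a task description, returns a result string.
Agent = Callable[[str], str]


def research_agent(task: str) -> str:
    # Placeholder: a real agent would call a model or search tool here.
    return f"research notes for: {task}"


def scheduling_agent(task: str) -> str:
    return f"proposed meeting slot for: {task}"


def analysis_agent(task: str) -> str:
    return f"analysis summary for: {task}"


@dataclass
class Coordinator:
    agents: Dict[str, Agent]  # role name -> specialist agent

    def dispatch(self, role: str, task: str) -> str:
        # Hand the task to the specialist registered under `role`.
        if role not in self.agents:
            raise KeyError(f"no agent registered for role {role!r}")
        return self.agents[role](task)

    def run_pipeline(self, steps: List[Tuple[str, str]]) -> List[str]:
        # Run steps in order, passing each agent's output to the next as context.
        results: List[str] = []
        context = ""
        for role, task in steps:
            prompt = f"{task} (context: {context})" if context else task
            context = self.dispatch(role, prompt)
            results.append(context)
        return results


team = Coordinator({
    "research": research_agent,
    "scheduling": scheduling_agent,
    "analysis": analysis_agent,
})
print(team.run_pipeline([("research", "Q3 market trends"), ("analysis", "summarize findings")]))
```

Where this coordinator and its agents run, on your own hardware or on a provider's servers, is exactly the hosting decision the rest of this article is about.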

How Processing Location Changes Everything

When your AI agents process data on-device, everything happens locally on your hardware, with no internet connection required. Think of it like having a personal assistant who never leaves your office. Cloud processing, meanwhile, sends your data to remote servers, essentially outsourcing the thinking to powerful data centers around the globe.

The location decision creates ripple effects across privacy, speed, and budget that can make or break your AI implementation.

Key Benefits & Use Cases

- On-Device Privacy Fortress: Sensitive industries like healthcare and legal services benefit from keeping data on local hardware, so confidential records are never sent to a third-party server

- Cloud Scalability Power: E-commerce and customer service teams can handle thousands of simultaneous interactions without buying or maintaining their own hardware

- Hybrid Flexibility: Financial analysts can run sensitive calculations on-device while using the cloud for data-heavy research

Cost Analysis: The Real Numbers

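On-device processing requires an upfront hardware investment ($2,000-$10,000 for capable workstations) but has minimal ongoing costs beyond power and maintenance. Cloud AI services, such as those offered on AWS or Azure, bill by usage, typically $0.50-$5 per 1,000 requests (many language-model APIs price per token instead, so the per-request cost depends on prompt and response length). For teams processing millions of requests monthly, cloud costs can quickly surpass $10,000 (2 million requests at $5 per 1,000 is $10,000), while on-device costs stay predictable after the initial outlay.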

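As a rough estimate, the break-even point falls around 2-3 million monthly interactions, making on-device more economical for high-volume, predictable workloads. The exact threshold shifts considerably with per-request pricing, how long you amortize the hardware, and running costs such as power and maintenance.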
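To see how sensitive the break-even point is to these inputs, here is a minimal Python sketch. It assumes straight-line amortization of the hardware over 36 months (matching a 3-year planning horizon), a hypothetical $500/month for power and upkeep, and purely linear per-request cloud pricing; it ignores hardware capacity limits and staff time.

```python
def on_device_monthly_cost(hardware_cost: float, months: int, running_cost: float) -> float:
    """Hardware spread evenly over `months`, plus fixed monthly running costs."""
    return hardware_cost / months + running_cost


def cloud_monthly_cost(requests: int, price_per_1k: float) -> float:
    """Pay-as-you-go: cost grows linearly with request volume."""
    return requests / 1000 * price_per_1k


def break_even_requests(hardware_cost: float, months: int,
                        running_cost: float, price_per_1k: float) -> float:
    """Monthly request volume at which cloud and on-device cost the same."""
    return on_device_monthly_cost(hardware_cost, months, running_cost) / price_per_1k * 1000


# Assumed inputs: $10,000 workstation over 36 months, $500/month running cost.
for price in (0.50, 5.00):
    volume = break_even_requests(10_000, 36, 500, price)
    print(f"${price:.2f} per 1k requests -> break-even at {volume:,.0f} requests/month")
```

With these assumed inputs, the break-even volume is about 1.56 million requests per month at $0.50 per 1,000 but only about 156,000 at $5 per 1,000. Above the break-even volume, on-device is cheaper because the cloud bill keeps growing with every request. Plug in your own quotes before trusting any single threshold.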

US & EU Regulatory Landscape

In Europe, GDPR compliance makes on-device processing increasingly attractive: when data never leaves the device, cross-border transfer concerns largely disappear, although GDPR still governs the processing itself. US healthcare organizations facing HIPAA requirements similarly favor local processing for patient data, even though HIPAA permits cloud processing under a business associate agreement. Meanwhile, California's CCPA and emerging state privacy laws are pushing more companies toward privacy-first architectures that minimize data exposure.

On-Device vs Cloud: Head-to-Head

- On-Device Processing: Maximum privacy, no network round-trip latency, predictable costs, but limited by local hardware and harder to update

- Cloud Processing: Elastic scalability, provider-managed model updates, pay-as-you-go pricing, but dependent on network connectivity and provider uptime, with greater data exposure risk

- Hybrid Approach: Sensitive tasks on-device, heavy lifting in the cloud; the best of both worlds, but it requires a more sophisticated architecture that routes each task to the right place
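The hybrid approach hinges on deciding, per task, where it runs. Here is a minimal Python sketch of a sensitivity-based router. The regex rules and the `local_model`/`cloud_model` functions are hypothetical stand-ins; a real deployment would follow its own data-classification policy and call actual model runtimes.

```python
import re
from typing import Callable

Backend = Callable[[str], str]

# Hypothetical rules; a real deployment would use its own data-classification policy.
SENSITIVE_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),  # US Social Security number format
    re.compile(r"\b(patient|diagnosis|medical record)\b", re.IGNORECASE),
    re.compile(r"\b(account number|salary)\b", re.IGNORECASE),
]


def is_sensitive(task: str) -> bool:
    """True if any sensitive pattern appears in the task text."""
    return any(p.search(task) for p in SENSITIVE_PATTERNS)


def route(task: str, local: Backend, cloud: Backend) -> str:
    """Run sensitive tasks on the on-device backend; send everything else to the cloud."""
    backend = local if is_sensitive(task) else cloud
    return backend(task)


def local_model(task: str) -> str:
    return f"[on-device] {task}"  # placeholder for a local model call


def cloud_model(task: str) -> str:
    return f"[cloud] {task}"  # placeholder for a cloud API call


print(route("Summarize patient intake notes", local_model, cloud_model))
print(route("Research competitor pricing trends", local_model, cloud_model))
```

Pattern matching is only a first line of defense. Defaulting to the on-device backend when classification is uncertain is the safer failure mode for regulated data.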

Choosing Your Strategy: 5-Step Framework

- Assess Your Data Sensitivity: Classify what absolutely cannot leave your premises versus what’s acceptable for cloud processing

- Calculate True Costs: Model both scenarios over 3 years, including hardware, maintenance, power, and projected growth in cloud usage

- Test Real-World Latency: Run pilot projects measuring response times for your specific use cases

- Evaluate Team Skills: On-device deployments require more technical expertise. Do you have that capability in-house?

- Plan for Growth: Choose an architecture that supports your 2-year roadmap without requiring a complete rebuild
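Step 3 (testing real-world latency) can start with a small timing harness. This minimal Python sketch times repeated calls and reports median and 95th-percentile latency. The `fake_local_call` and `fake_cloud_call` functions are hypothetical stand-ins that just sleep; replace them with your actual on-device and cloud agent invocations.

```python
import statistics
import time
from typing import Callable, Dict


def measure_latency(call: Callable[[], object], runs: int = 50) -> Dict[str, float]:
    """Time `runs` calls and report median (p50) and 95th-percentile latency in ms."""
    if runs < 2:
        raise ValueError("need at least 2 runs to estimate percentiles")
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append((time.perf_counter() - start) * 1000)
    # quantiles(n=20) returns 19 cut points; index 18 is the 95th percentile.
    # "inclusive" keeps the estimate within the observed range for small pilots.
    p95 = statistics.quantiles(samples, n=20, method="inclusive")[18]
    return {"p50_ms": statistics.median(samples), "p95_ms": p95}


# Stand-ins for real calls: replace with your on-device and cloud agent invocations.
def fake_local_call() -> None:
    time.sleep(0.02)


def fake_cloud_call() -> None:
    time.sleep(0.08)


for name, fn in (("on-device", fake_local_call), ("cloud", fake_cloud_call)):
    stats = measure_latency(fn, runs=10)
    print(f"{name}: p50={stats['p50_ms']:.1f} ms, p95={stats['p95_ms']:.1f} ms")
```

Reporting the 95th percentile alongside the median matters for agent teams, because a single slow call in a chain of agents delays the whole response.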

FAQs

Can I switch from cloud to on-device later?

Yes, but it's like moving from renting to owning a home: expect significant upfront investment and migration effort. Plan your architecture with future flexibility in mind.
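One way to keep that flexibility is to have agents call models only through a narrow interface, so moving from cloud to on-device means swapping one backend instead of rewriting every agent. This minimal Python sketch uses `typing.Protocol`; `ModelBackend`, `CloudBackend`, `LocalBackend`, and `ResearchAgent` are hypothetical names, and the backends return placeholder strings where real SDK or runtime calls would go.

```python
from typing import Callable, Dict, Protocol


class ModelBackend(Protocol):
    """Hypothetical interface that every model backend implements."""

    def complete(self, prompt: str) -> str: ...


class CloudBackend:
    def complete(self, prompt: str) -> str:
        # Placeholder: call your cloud provider's SDK here.
        return f"[cloud] {prompt}"


class LocalBackend:
    def complete(self, prompt: str) -> str:
        # Placeholder: call your on-device model runtime here.
        return f"[local] {prompt}"


BACKENDS: Dict[str, Callable[[], ModelBackend]] = {
    "cloud": CloudBackend,
    "local": LocalBackend,
}


def make_backend(name: str) -> ModelBackend:
    """Build a backend from a config value, so switching is a one-line change."""
    return BACKENDS[name]()


class ResearchAgent:
    def __init__(self, backend: ModelBackend) -> None:
        self.backend = backend  # the agent never imports a vendor SDK directly

    def run(self, topic: str) -> str:
        return self.backend.complete(f"Research: {topic}")


agent = ResearchAgent(make_backend("cloud"))  # today
print(agent.run("EU data residency options"))
agent = ResearchAgent(make_backend("local"))  # after migrating
print(agent.run("EU data residency options"))
```

The same seam also makes a hybrid setup easier, since a router can pick a backend per task without the agents knowing which one they got.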

How much latency improvement does on-device provide?

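Removing the network round trip typically saves 200-500 ms per interaction, and in a multi-agent team where agents call each other in sequence, those savings add up with every call. However, a local model on modest hardware may take longer to generate a response than a cloud model running on data-center GPUs, so measure both. For real-time applications, the savings can turn noticeable lag into a near-instant response.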

Is a hybrid approach more expensive?

Initially, yes: you're maintaining both infrastructures. But for organizations with mixed sensitivity needs, the operational benefits often justify the added complexity.

Bottom Line

There's no one-size-fits-all answer, but there is a right answer for your specific needs. High-privacy, high-volume operations lean toward on-device, while rapidly scaling startups often start with the cloud. The smartest teams are building flexible architectures that can leverage both, because in the world of AI teamwork, location really is everything.